SpaceX Orbital Data Centers

Orbital AI is the key to the high frontier

SpaceX uses AI across a broad range of applications to reduce personnel workload and increase productivity. While Elon Musk was once reluctant to embrace AI, he is now committed to exploring its full potential.

“I resisted AI for too long. Living in denial. Now it is game on. @xAI @Tesla @SpaceX” ~ Elon Musk/X

Currently SpaceX has relatively little compute power compared with big players like OpenAI, Amazon and Google, although they can rent computing time from Tesla and xAI. Intriguingly, SpaceX intend to fill this gap with their next generation of Starlink satellites, effectively creating the first orbital data center.

“[There has been a lot of debate about the viability of data centers in space.] Simply scaling up Starlink V3 satellites, which have high speed laser links, would work. SpaceX will be doing this.” ~ Elon Musk/X

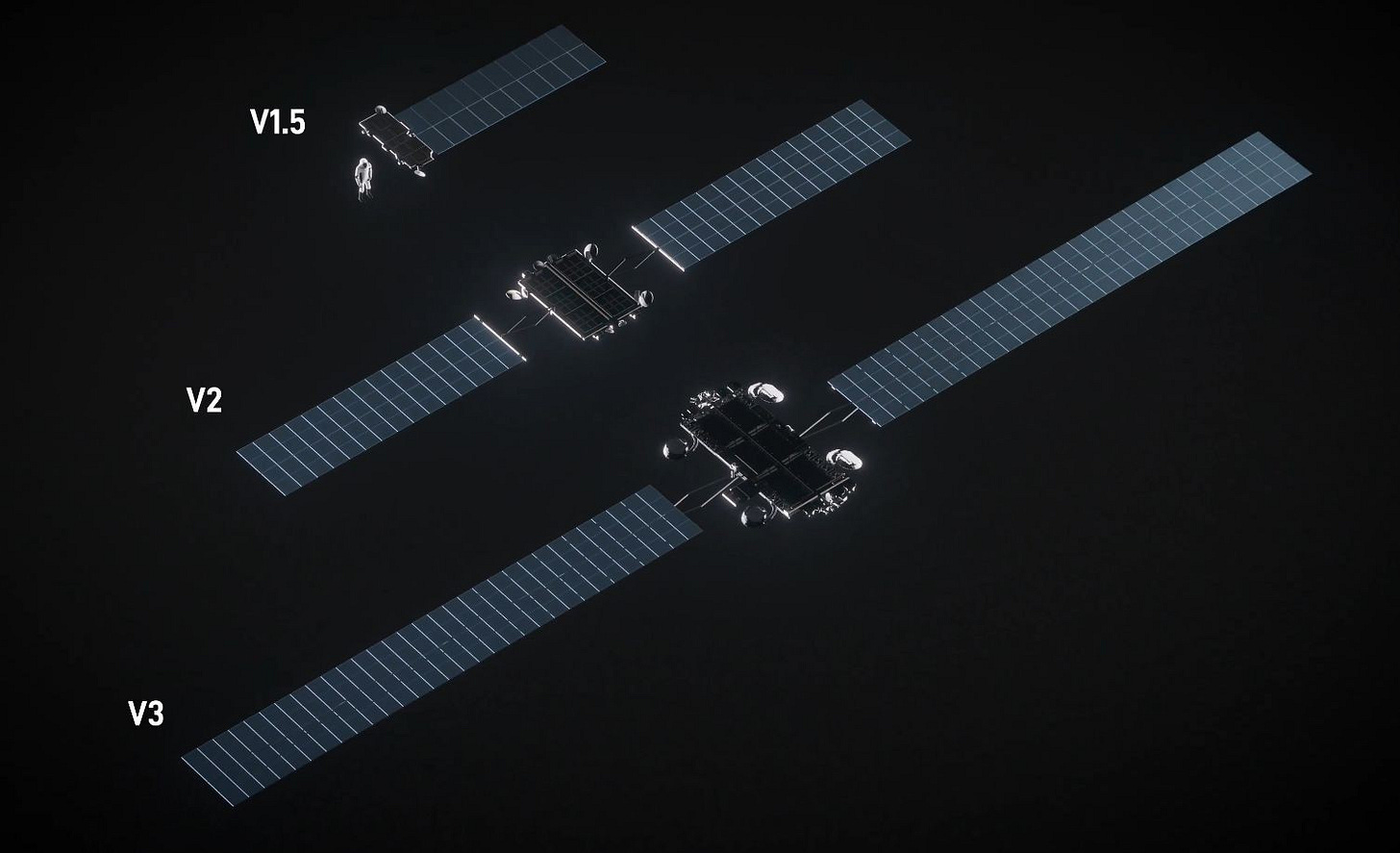

Each Starlink V3 satellite is designed for ~1 Tbps of downlink and 160-200 Gbps of uplink capacity, and routing that much traffic already requires considerable processing power onboard. More significantly, SpaceX intend to deploy ~30,000 V3 satellites, each equipped with numerous processors, which together should provide compute power comparable to any AI center on Earth.
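To put those link rates in perspective, the sketch below multiplies the quoted per-satellite figures by the ~30,000 planned satellites. It measures raw bandwidth, not computing power, and assumes every satellite runs at its nominal rate at the same time, which real traffic never does; the function name is purely illustrative.

```python
# Back-of-envelope aggregate link capacity for a ~30,000-satellite V3 constellation.
# Per-satellite rates are the nominal figures quoted in the text.

def aggregate_tbps(satellites: int, per_satellite_gbps: float) -> float:
    """Total raw link capacity in Tbps if every satellite ran at its nominal rate."""
    return satellites * per_satellite_gbps / 1000.0

V3_SATELLITES = 30_000
downlink = aggregate_tbps(V3_SATELLITES, 1000.0)   # 1 Tbps downlink each
uplink_low = aggregate_tbps(V3_SATELLITES, 160.0)  # low end of the uplink range
uplink_high = aggregate_tbps(V3_SATELLITES, 200.0) # high end of the uplink range

print(f"Downlink: {downlink:,.0f} Tbps (~{downlink / 1000:.0f} Pbps)")
print(f"Uplink:   {uplink_low:,.0f}-{uplink_high:,.0f} Tbps")
```

On these assumptions the constellation tops out around 30 Pbps of downlink, an upper bound rather than a throughput forecast.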

Challenges of Space AI

Normal tech companies find it challenging to build AI centers on Earth, so building one in orbit seems like pie in the sky to many. Certainly, the challenges of orbital operations are steep:

Heat dissipation – AI centers need to dissipate hundreds of megawatts of heat due to the high density of GPUs. This problem is compounded in space because there is no air for convection and nothing to conduct heat away into, so excess heat must be radiated as infrared. However, V3 satellites should be spaced 100-150 km apart, which avoids any concentration of heat. In addition, each satellite is designed to dissipate all the heat it generates, which suggests SpaceX has essentially solved the heat problem at the satellite level.
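Because radiation is the only way to shed heat in vacuum, radiator size follows from the Stefan-Boltzmann law, P = εσA(T⁴ − T_sink⁴). The sketch below solves that for the area A; the 100 kW heat load, 0.9 emissivity and radiator temperatures are illustrative assumptions, not Starlink specifications, and the model ignores absorbed sunlight and Earth's infrared glow, both of which make real radiators larger.

```python
STEFAN_BOLTZMANN = 5.670374419e-8  # W m^-2 K^-4 (CODATA value)

def radiator_area_m2(heat_w: float, temp_k: float, emissivity: float = 0.9,
                     sides: int = 2, sink_temp_k: float = 3.0) -> float:
    """Radiator area needed to reject heat_w by thermal radiation alone.

    Assumes a flat panel radiating from `sides` faces to a cold sink
    (deep space, ~3 K). Ignores absorbed sunlight and Earth's infrared,
    so the result is a lower bound on the real area.
    """
    if temp_k <= sink_temp_k:
        raise ValueError("radiator must be hotter than its sink")
    flux_per_side = emissivity * STEFAN_BOLTZMANN * (temp_k**4 - sink_temp_k**4)
    return heat_w / (sides * flux_per_side)

# Hypothetical example: 100 kW of waste heat with radiators at 300 K and 350 K.
for t in (300.0, 350.0):
    print(f"{t:.0f} K: {radiator_area_m2(100_000, t):.0f} m^2")
```

Because emitted flux scales with T⁴, running the radiators hotter (about 121 m² at 300 K versus 65 m² at 350 K here) shrinks them sharply, which is why chip operating temperature matters as much as raw power.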

Computer coherence – coordinating data exchange between 30,000 satellites without bottlenecks or packet loss looks like an insurmountable problem. However, xAI managed to integrate 100,000 GPUs with good coherence at its Colossus center, and there are no Chinese walls between Elon’s companies, so that expertise can be shared with SpaceX.

Transmission lag – the distance between satellites introduces an unavoidable delay in data transfer: light takes roughly 0.3-0.5 ms to cross a 100-150 km gap. SpaceX will presumably run many satellites in parallel and then concentrate the results, minimizing transmission delays.
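The lag figures follow directly from the speed of light. The sketch below computes one-way propagation delay per laser hop and across a chain of hops; the 20-hop path and the optional per-hop processing allowance are hypothetical parameters, not measured Starlink values.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458  # laser links between satellites travel at c in vacuum

def propagation_delay_ms(distance_km: float) -> float:
    """One-way light travel time across a single inter-satellite hop, in ms."""
    return distance_km / SPEED_OF_LIGHT_KM_S * 1000.0

def path_delay_ms(hops: int, spacing_km: float, per_hop_processing_ms: float = 0.0) -> float:
    """Total one-way delay over `hops` equally spaced links.

    per_hop_processing_ms is a hypothetical switching/queuing allowance per hop.
    """
    return hops * (propagation_delay_ms(spacing_km) + per_hop_processing_ms)

for d in (100.0, 150.0):
    print(f"{d:.0f} km hop: {propagation_delay_ms(d):.3f} ms")
print(f"20 hops of 150 km: {path_delay_ms(20, 150.0):.1f} ms")
```

Even a 20-hop path stays near 10 ms of pure propagation, so in practice per-hop switching and queuing are likely to dominate, which is one reason to keep tightly coupled work on neighboring satellites.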

Power consumption – the current V2 Starlink satellites use AMD Versal AI Core processors, which consume ~80 W for inference work. However, SpaceX has recruited engineers to develop cutting-edge silicon for the V3 Starlink system. Their in-house processors will likely outperform the Versal AI chip at equal or lower power consumption, and combined with the larger power budget of V3 satellites, this could give each satellite more than double the processing power of a V2 satellite.
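The "more than double" argument can be written as a simple product: if onboard compute scales with available power times performance per watt, a doubled power budget alone doubles compute, and any efficiency gain pushes it higher. The sketch below assumes that proportionality, reuses the ~80 W chip figure and the ~75 kW (V2) and ~150 kW (V3) figures quoted in the power-generation item, and treats the 10 kW compute budget as purely hypothetical; real designs are also limited by radiator area and power conversion losses.

```python
VERSAL_CLASS_CHIP_W = 80.0  # ~80 W per inference chip, the figure quoted for V2

def chips_supported(compute_budget_w: float, chip_w: float = VERSAL_CLASS_CHIP_W) -> int:
    """Whole processors a power budget can run (ignores cooling and conversion losses)."""
    return int(compute_budget_w // chip_w)

def relative_compute(power_ratio: float, perf_per_watt_ratio: float) -> float:
    """New-vs-old compute, assuming throughput scales with power x performance-per-watt."""
    return power_ratio * perf_per_watt_ratio

# Hypothetical: 10 kW of a satellite's power set aside for compute.
print(chips_supported(10_000))  # → 125
# Power ratio from the quoted ~150 kW (V3) vs ~75 kW (V2); chip efficiency equal or better.
for eff in (1.0, 1.5):
    print(f"perf/W x{eff}: compute x{relative_compute(150 / 75, eff):.1f}")
```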

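Power generation – solar panels produce far more energy in space than on Earth (up to 40 times more by some estimates), because sunlight is not filtered by the atmosphere or blocked by weather, and satellites spend most of each orbit in sunlight. The 52.5 m² panels used by V2 satellites are quoted at ~75 kW, although that figure looks high: sunlight in Earth orbit delivers ~1.36 kW/m², so 52.5 m² can collect at most ~71 kW even at 100% conversion efficiency. Whatever the exact baseline, the 105 m² panels used on V3 satellites should generate roughly double the power of V2 panels (~150 kW on the quoted figures), possibly more if SpaceX improve conversion efficiency.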
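Panel output can be bounded from the solar constant (~1361 W/m² above the atmosphere). The sketch below assumes a flat panel facing the Sun; the 30% cell efficiency and 65% sunlit fraction are illustrative assumptions, not published Starlink figures.

```python
SOLAR_CONSTANT_W_PER_M2 = 1361.0  # mean solar irradiance above the atmosphere at 1 AU

def panel_output_kw(area_m2: float, efficiency: float, sunlit_fraction: float = 1.0) -> float:
    """Electrical output (kW) of a sun-facing panel.

    efficiency: cell conversion efficiency, 0-1.
    sunlit_fraction: share of the orbit in sunlight; 1.0 gives peak output,
    a smaller value gives an orbit-averaged figure.
    """
    return SOLAR_CONSTANT_W_PER_M2 * area_m2 * efficiency * sunlit_fraction / 1000.0

# Physical ceiling for the V2 panel area: every incoming photon converted.
print(f"52.5 m^2 at 100%: {panel_output_kw(52.5, 1.0):.1f} kW")
# Hypothetical V3 case: 105 m^2 at an assumed 30% cell efficiency.
print(f"105 m^2 at 30%: {panel_output_kw(105.0, 0.30):.1f} kW peak")
print(f"105 m^2 at 30%, 65% sunlit: {panel_output_kw(105.0, 0.30, 0.65):.1f} kW average")
```

Because output is linear in area, doubling the panel area doubles power at any fixed efficiency, which is the scaling the V2-to-V3 estimate relies on.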

Constellation capacity – Starlink satellites are sized for peak demand, which typically occurs as they pass over coastal areas where users are concentrated. Hence these satellites have spare capacity when they cross oceans, deserts, the poles and sparsely populated interiors, which make up more than 90% of their orbit. This implies a constellation of 30,000 satellites has huge idle capacity, ready to be tapped for AI applications.
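A rough way to size that idle capacity is to multiply the constellation size by the fraction of each orbit spent over low-demand regions. The sketch below treats "spare" as if the whole satellite were free during that time, so it is an upper bound under that assumption; the function name is illustrative.

```python
def idle_equivalents(satellites: int, idle_fraction: float) -> float:
    """Average number of satellites with spare capacity at any moment,
    if each spends idle_fraction of its orbit over low-demand regions."""
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    return satellites * idle_fraction

idle = idle_equivalents(30_000, 0.9)
print(f"~{idle:,.0f} satellites idle on average, "
      f"~{idle * 24:,.0f} satellite-hours of spare capacity per day")
```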

Constellation finance – funding 30,000 satellites seems an impossible task for any company except SpaceX. They have already launched 10,000 Starlink satellites, and the operation is now self-funding thanks to huge demand and little competition. Note that Falcon 9 launch costs are already historically low and should fall further with Starship, thanks to cheaper, more capable engines.

“[Starship’s] Raptor 3 will probably be 2 to 4 times better than [Falcon’s] Merlin in $/ton of thrust and will exceed Merlin in Thrust to Weight Ratio. Raptor 4 should beat Merlin by >10X in $/ton of thrust, with further improvement in TWR and ISP.” ~ Elon Musk/X

Overall, orbital data centers seem an achievable goal, at least for SpaceX with its inherent technical and cost advantages. Achieving Artificial General Intelligence (AGI) should be possible, with Artificial Superintelligence (ASI) as a stretch goal for the company.

Succinctly: Starlink will become a mother AI.

Daughter Data Centers

Generally, SpaceX like to trial on Earth the systems they will need to settle other worlds, and Starlink AI is no exception. When ready, they could create daughter AI systems anywhere in the inner solar system using only a handful of Starships. Each Ship can carry 60 satellites, so it shouldn’t take long to deploy rudimentary systems around other worlds, starting with the Moon and Mars. Ideally, these orbital systems would be deployed before settlement begins in earnest, so they can monitor landing sites and provide GPS-style navigation to descending spacecraft.
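The launch arithmetic is a ceiling division by the 60-satellites-per-Ship figure above. The sketch assumes every Starship flies fully loaded; the 120-satellite starter system in the example is a hypothetical size, not a SpaceX plan.

```python
import math

SATELLITES_PER_STARSHIP = 60  # payload figure quoted in the text

def starships_needed(satellites: int, per_ship: int = SATELLITES_PER_STARSHIP) -> int:
    """Minimum Starship flights to deliver a constellation, assuming every ship flies full."""
    return math.ceil(satellites / per_ship)

print(starships_needed(30_000))  # full Earth constellation
print(starships_needed(120))     # hypothetical starter system around the Moon or Mars
```

A small daughter system needs only a couple of flights, while the full 30,000-satellite constellation would take around 500.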

Later on, as the daughter system grows, it could manage settlement communications, space traffic and surface operations. Essentially, settlers would have full support from the moment they arrive, without waiting for data centers to be built on the surface.

Succinctly: Starlink is a plug and play AI for the high frontier.

Baby AI

While each Starship has sufficient compute power to operate independently, the ships could be interlinked to pool their compute capacity. SpaceX want to send hundreds of Starships to Mars during the month-long transfer window, essentially creating an extended convoy. These ships will need to exchange data with one another, so each ship could become a computer node in a convoy-wide network. Fortunately, SpaceX has already tested laser communication on the Polaris Dawn mission, in which a Dragon spacecraft linked to the Starlink network. Given this success, laser links will likely become the norm between spacecraft thanks to their high bandwidth, lower power use and improved security.
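A convoy network can be modeled as a graph: ships are nodes, and two ships share a laser link when they are within range. The sketch below is a simplified one-dimensional model; the 1,000 km spacing, 2,500 km link range and 200-ship convoy are hypothetical values, and a breadth-first search finds the minimum number of hops a message needs to reach each ship.

```python
from collections import deque

def convoy_links(positions_km: list[float], link_range_km: float) -> dict[int, list[int]]:
    """Adjacency list: ships i and j are linked if they are within laser range."""
    n = len(positions_km)
    adj: dict[int, list[int]] = {i: [] for i in range(n)}
    for i in range(n):
        for j in range(i + 1, n):
            if abs(positions_km[i] - positions_km[j]) <= link_range_km:
                adj[i].append(j)
                adj[j].append(i)
    return adj

def hop_counts(adj: dict[int, list[int]], source: int) -> dict[int, int]:
    """Breadth-first search: minimum laser hops from source to every reachable ship."""
    hops = {source: 0}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        for neighbor in adj[node]:
            if neighbor not in hops:
                hops[neighbor] = hops[node] + 1
                queue.append(neighbor)
    return hops

# Hypothetical convoy: 200 ships in a line, 1,000 km apart, 2,500 km link range.
ships = [i * 1000.0 for i in range(200)]
hops = hop_counts(convoy_links(ships, 2500.0), 0)
print(f"Reachable ships: {len(hops)}, hops from lead to tail ship: {hops[199]}")
```

Ships that fall out of range simply drop out of the hop table, so the same search also shows whether the convoy stays fully connected as spacing drifts.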

In Conclusion

As you might expect, SpaceX is plotting its own unique course to AI, one that exploits orbital infrastructure. Going forward, space AI will particularly benefit the colonization process, both on transport ships and on colony worlds.

Our own world will be the first to benefit, once SpaceX begin deploying Version 3 Starlink. No doubt this constellation will become a communications and computing hub for all space activity, arriving just in time for our new space era.

Watched Elon on Joe Rogan the other day, talking about his flying car: "It looks like a car, but it's not a car. The technology in there is next level." No wings, no rockets, no propellers. What is the technology? He claims there will be a demonstration either before the end of the year or early next year.

Is this a technology that could be used in space drives?